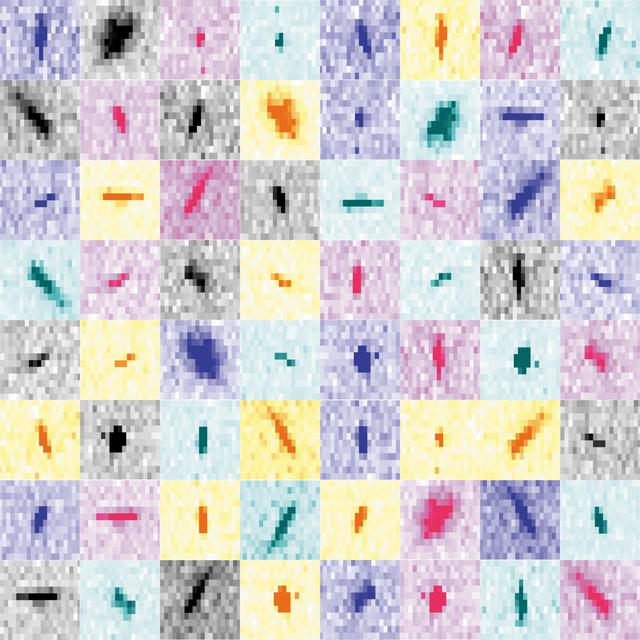

Collage of partial Bragg spots recorded during XFEL experiments. For each pixel in the ‘shoebox’ surrounding a Bragg spot, diffBragg precisely models the various parameters that affect the spot's shape and intensity.

X-ray free-electron lasers (XFELs) came into use for protein crystallography in 2010, allowing scientists to study fully hydrated specimens at room temperature without radiation damage. Researchers have since developed many new experimental and computational techniques to make the most of the technology and to draw the most accurate picture of proteins from their crystals. Now scientists in the Molecular Biophysics and Integrated Bioimaging (MBIB) Division have developed a new program, diffBragg, that can process each pixel recorded in an XFEL experiment individually. In a recent IUCrJ paper, a team led by MBIB Senior Scientist Nicholas Sauter proposed this new processing framework for determining protein structures more accurately.

Scientists use X-ray crystallography to determine the three-dimensional structures of proteins by measuring the pattern of Bragg spots produced when a stream of protein crystals diffracts an X-ray beam. However, conventional image-processing algorithms do not precisely fit the Bragg spots recorded at XFELs, so the full potential of the data goes unrealized.

“Cutting-edge discovery in structural biology has always been about extending the resolution, in both space and time, of what can be visualized,” said Sauter, senior author of the paper. “By extracting more accurate information from XFELs we get a better spatial resolution that reveals more about enzyme mechanisms, and potentially allows us to see tiny changes (less than the length of an atomic bond) to follow chemical reactions over time.”

One longstanding focus for Sauter and his team has been understanding photosystem II, the key macromolecule in photosynthesis, and how it collects the energy of four photons of sunlight and channels it into the creation of a single molecule of oxygen (O₂) from water. Sauter explained, “XFEL light sources allow us to follow this reaction on a microsecond time scale, and the new data processing offers us a chance to improve the picture from this exceedingly difficult experiment. However, our current work is at the theoretical proof-of-principle stage and will require a dedicated effort to apply the methods to our photosystem II experiments.”

Although this data-processing approach is computational, its success relies on decades of instrumentation development that now make it possible to record X-ray diffraction patterns with high sensitivity at high frame rates. “We were able to get an early preview of the latest imaging detectors just now coming online, and they are sensitive to single X-ray photons per pixel, with framing rates of 2 kHz. That amounts to a 10-fold improvement in measurement accuracy and a 10-fold improvement in framing rate, compared to where we were 10 years ago,” said Derek Mendez, project scientist and first author of the IUCrJ article. “This is impressive technology that is complemented by our new approach that can computationally model the data at the level of a single X-ray photon.”

While this work is at a very early stage, it should eventually allow researchers to model more detail in protein crystals. Although every crystal contains copies of the same protein, the individual molecules can adopt many different backbone and side-chain conformations. This internal disorder is currently difficult to model. diffBragg should also allow scientists to correctly account for correlated uncertainties; for example, if one particular pixel is relatively unreliable, its effect on the final model can be minimized.
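The idea of limiting an unreliable pixel's influence can be illustrated with a toy inverse-variance weighted fit. This is only a sketch and not diffBragg's actual method: it assumes independent, per-pixel Gaussian noise (so it ignores the correlations mentioned above), and the five-pixel spot profile, the pixel values, and the `weighted_scale` helper are all hypothetical. It fits a single scale factor relating a model spot profile to observed pixel intensities, first with equal weights and then with the corrupted pixel assigned a very large variance.

```python
from typing import Sequence


def weighted_scale(observed: Sequence[float], model: Sequence[float],
                   variances: Sequence[float]) -> float:
    """Return the scale s minimizing sum_i (observed_i - s * model_i)**2 / variances_i.

    Hypothetical helper: each pixel is weighted by 1 / variance, so a pixel
    with a huge variance (one we distrust) barely affects the result.
    Assumes independent pixels; correlated uncertainties are not modeled.
    """
    numerator = sum(m * y / v for y, m, v in zip(observed, model, variances))
    denominator = sum(m * m / v for m, v in zip(model, variances))
    return numerator / denominator


# Hypothetical five-pixel spot profile; the true scale is 2.0.
model = [1.0, 4.0, 9.0, 4.0, 1.0]
# Observed intensities: the last pixel is corrupted (50 instead of 2).
observed = [2.0, 8.0, 18.0, 8.0, 50.0]

# Every pixel trusted equally.
equal_weights = weighted_scale(observed, model, [1.0] * 5)
# The unreliable pixel gets a very large variance, i.e. a tiny weight.
down_weighted = weighted_scale(observed, model, [1.0, 1.0, 1.0, 1.0, 1e6])

print(f"equal weights: s = {equal_weights:.3f}")
print(f"down-weighted: s = {down_weighted:.3f}")
```

With equal weights, the single corrupted pixel pulls the fitted scale up to about 2.42; once that pixel is down-weighted, the fit recovers a scale very close to the true value of 2.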

The MBIB researchers worked with engineers at the SLAC Linac Coherent Light Source (LCLS) in their efforts to improve the data processing pipeline for X-ray crystallography. The team also collaborated with the National Energy Research Scientific Computing Center (NERSC) to implement their approach.

Because diffBragg performs calculations on every pixel collected, which amounts to 100 billion pixels per experiment, these analyses demand a large amount of computing power. “One of the peer reviewers for our paper took note of the large computational footprint, and wanted to know if there were simpler algorithms that would be more tractable,” said Sauter. “We therefore revised our paper with performance numbers for GPU acceleration, showing that this is truly a problem that can be solved on the Perlmutter supercomputer when it comes online in 2021!”

The work was funded by the Exascale Computing Project and NIH/NIGMS. In addition to Sauter and Mendez, MBIB researchers involved in this work were project scientists Robert Bolotovsky and Asmit Bhowmick, research scientist Aaron Brewster, staff scientist Jan Kern, senior staff scientist Junko Yano, and faculty scientist James M. Holton.